AI Is Eating Itself

A Human's Guide to Giving ChatGPT Dementia.

“Mirrors and copulation are abominable because they increase the number of men”.

“Where did you get that quote?” Jorge replied.

Adolfo answers: “From a knock-off version of the Encyclopedia Britannica, the section on the nation of Uqbar. It’s a quote from one of their high priests.”

The two have been working tirelessly on their next book, holed up in a cabin on the outskirts of Buenos Aires. Eagerly seeking inspiration, they crack open the encyclopedia in search of the quote, scanning the indices for Uqbar. To their surprise, they can’t find anything on it.

The next day Adolfo informs Jorge that he has found the entry. Although the encyclopedia is listed as having 917 pages, there are actually 921, the final four of which contain the information on Uqbar. The details are foggy; the country is listed as somewhere near Iraq, bordering rivers they’ve never heard of. The encyclopedia makes detailed mention of Uqbar’s fantastical literary tradition, notably a fictional universe called Tlön in which many Uqbar myths take place. The two continue to search other atlases and encyclopedias for information on Uqbar, but again, they can’t find any more.

Years pass and Jorge receives word that one of his old friends has died, leaving him an encyclopedia, a new edition of the original knock-off. However, this one is different. It seems to be based entirely on Uqbar’s fictional universe Tlön. The 1001 pages vividly describe Tlön: the history, the language, the science, and the philosophy.

On Tlön they believe in an extreme version of subjective idealism; the idea that things only exist when perceived. Tlön has no material reality, no objectivity, just perception. Their language has no need for nouns, only adjectives and verbs. When people stop perceiving something—like a doorway—it fades from existence as memory fades. But when someone desires or expects an object strongly enough, Tlön creates a duplicate, shaped by expectation rather than reality. These copies aren’t quite the same as the original, but they’re more real to the perceiver because they match what they wanted to find, what they remembered.

Therefore, Tlön’s ‘science’ isn’t about discovering laws, it’s about inventing perfectly coherent systems. Philosophers compete to create the most astounding, internally consistent explanations, not the most accurate ones. Because reality is just perception, you can’t be wrong. Because your perception makes reality, everything is exactly how you think it is.

Jorge was consumed by this encyclopedia of Tlön, and more and more encyclopedias began popping up around the world. People became obsessed with Tlön’s perfect logic and consistency. Schools began teaching Tlön history, and Tlön’s language was used in education. Over time, people literally remembered Tlön instead of Earth, as if it had always been real. Like a systematic overwrite of collective memory, Tlön spread like an infectious disease, because people really wanted it.

Tlön is self-referential, a closed loop with no connection to base reality, copying its copies and copying those copies as perceivers perceive what they thought they saw before. Any basal experience a human brings to Tlön’s logic is re-referenced again and again, getting so far from the objective source that nothing is real, only perfect references. Although nothing is real, everything is true. On this Jorge observes:

“English and French and mere Spanish will disappear from the globe. The world will be Tlön”

This is a paraphrasing of Jorge Luis Borges’ 1940 short story Tlön, Uqbar, Orbis Tertius, a story that plays with the idea of recursion and how information slowly degrades when recursively copied, each generation further from the original until the original is lost entirely. On Earth, we would refer to someone who has lost connection to objective reality as delusional, psychotic, or demented. In AI systems, this phenomenon is known as model collapse.

A 2024 paper published in Nature documented that LLMs training on their own outputs develop what researchers call ‘irreversible defects’: they gradually lose information about the real world until they’re producing statistically degenerate outputs. Just like Tlön, this creates a closed system that only references itself, where every new iteration is shaped by expectation rather than ground truth, eventually replacing reality itself.

Then, in late 2025, a report from Anthropic showed that a model can be deliberately poisoned with a surprisingly small amount of synthetic data. Now a secret group of AI insiders is trying to do just that: data poisoning as a deliberate tactic to sabotage AI systems.

This is probably the most dangerous article I’ve ever written, not because of what I’m saying, but because I’m about to explain to you how a system collapses when it becomes completely self-referential. Once you understand the mechanism, you’ll understand why this is inevitable.

This is a Human’s guide to giving AI dementia.

THE FIRST WEAPON WAS ART

In November 2022, fantasy illustrator Kim Van Deun reached out to University of Chicago researcher Ben Zhao for a meeting. Zhao had made a name for himself by developing tools that protect users from facial recognition technology, and Kim thought that something similar could be deployed to protect artists’ artwork. 2022 was still the wild west of image generation: DALL-E 2 had launched earlier that year and the general public was only vaguely aware of how image generation worked, but artists knew. Artists knew their work was being scraped off the internet and used as training data in image generation models. Kim wanted to protect her work and sought out Zhao’s expertise. A few months later, the world’s first data poisoning tool for artists was built.

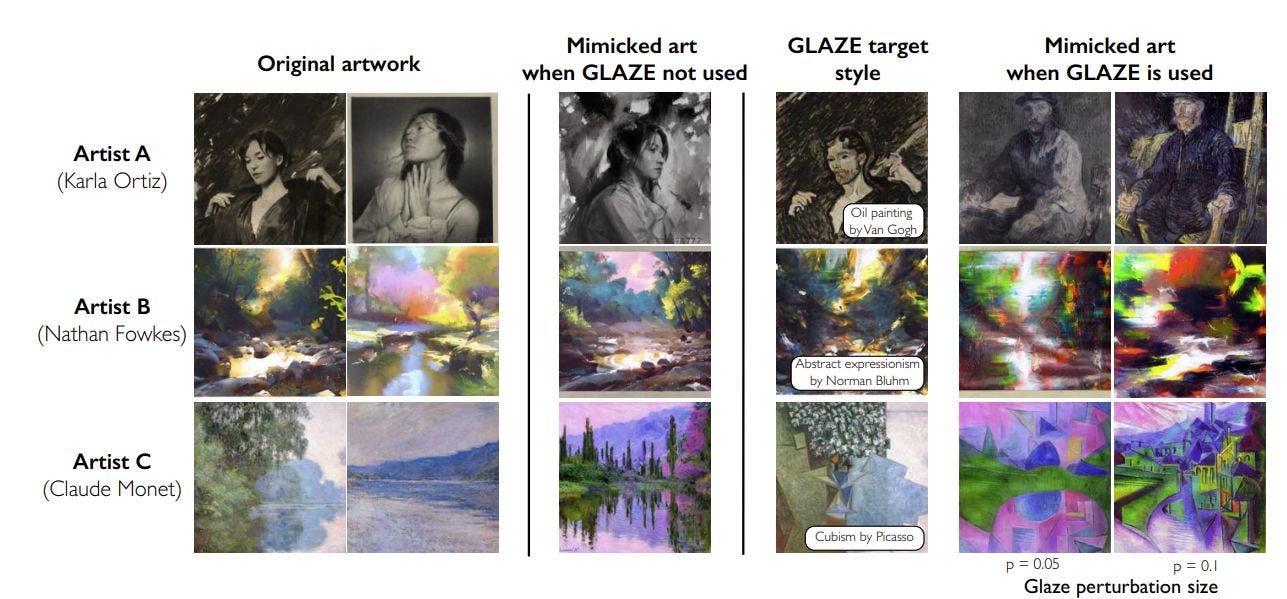

The tool, known as Glaze, adds small, imperceptible changes to uploads of artists’ work. To a human these changes are invisible, but to an image generation model they grossly skew its outputs. If one prompts an image generation model to copy a Glazed charcoal portrait, the model will spit out something fundamentally different from what was asked. Karla Ortiz posted the first Glazed painting, titled Musa Victoriosa, to Twitter on March 15th, 2023.

Zhao had created a useful tool, but one that was ultimately a band-aid solution. His team in Chicago is highly sophisticated and well funded, making them immediate targets for big tech as they try to bypass their tools. AI is literally trained to work around these issues and it’s very unlikely that Glazing will work far into the future, but Zhao had already moved on. Using the principle of poisoning data, he created a tool that wasn’t just defensive in protecting artwork from being stolen, but offensive in the sense that it could literally break image generation models.

Project Nightshade breaks image generation models by tricking them using the same technique as Glazing. For example: an image of a Nightshaded cat used in training data will be interpreted as something else entirely. If enough shaded images are added to an AI’s data set, it can break its ability to correctly respond to prompts. With as few as 100 poisoned samples in a model’s training data, the prompt “dog” can produce a cat, “hat” can produce a cake, and “car” can produce a cow.

Zhao has stated that his tools aren’t anti-AI; they simply serve to create an ecosystem where big tech has to get permission to access unaltered artwork for training, lest they risk poisoning their models with shaded artwork if they scrape it off the internet without checking. Sadly, this ecosystem has not been created.

In June 2025, findings were published on a new technique called LightShed, a method to detect and remove image protections like Glaze and Nightshade, reportedly with 99.98% accuracy.

(Imagine being the fucking researcher that worked on LightShed, that’s the kind of guy whose dogs start barking at him when he comes home from work.)

LightShed is yet another instance of the arms race between big tech and its detractors, and an example of the asymmetry of power. Big tech is simply moving quicker than the law can keep up. For example: the biggest potential landmark case, brought by artist Sarah Andersen against Midjourney and Stability AI, started in early 2023 and still hasn’t gone to trial. In those three years, there has been no impactful attempt to prevent any of this. The White House X page just shared AI-generated Stardew Valley artwork of Trump promoting whole milk. (If I was ConcernedApe, I’d be concerned).

Another example is the Silent Branding Attack project, which is a “novel data poisoning attack that manipulates text-to-image diffusion models to generate images containing specific brand logos without requiring text triggers”. It can make a Reddit logo appear on a table cloth, a Wendy’s logo on a jar, or the Nvidia logo on a surfboard, all unprompted. The goal of this project was to show just how easy it would be for a malicious company to burn its logo into image generation by poisoning a data set.

It seems that artists have converged on this idea of “data poisoning” as the most effective tool in preventing AI theft. It’s no longer enough to ask kindly or mask your artwork; you have to actively disincentivize theft. But this isn’t a new idea. A 2015 paper titled Is Feature Selection Secure Against Training Data Poisoning? described a scenario in which an attacker gets access to software training data and contaminates it. If malware detection software for PDFs is poisoned, it may no longer be able to classify and identify threats. In fact, Zhao’s software was based on a technique known as “clean label attacks” from a 2018 paper, where training images appear correctly labeled to humans yet can still break a neural network. Zhao simply took this idea and applied it to the use case of protecting artists’ work. Once a training set is poisoned, the model can break.

Now, stay on this concept of poisoned data: data that will decay a model’s outputs rather than improve them. Nightshade works in one round: the Nightshaded images are scraped for training data, the training data is poisoned, and the outputs come out bad and unusable. But it doesn’t stop there. Mass market models like GPT, Gemini, and Grok are used to output hundreds of millions of images, articles, tweets, websites, etc.: around 34 million images a day, and enough text that half of all articles on the internet are now AI-generated. These assets and generations are indiscriminately scraped off the open web.

In 2023, 120 zettabytes of data was created on the internet. In 2025, that number jumped to an estimated 181 zettabytes, a 51% increase, mostly driven by synthetic AI content being generated at an exponential pace. That’s images, articles, algorithms, anything you can imagine. Synthetic content that is being generated, posted, scraped, and trained on. Synthetic data that alters the next output. Outputs that will be posted, scraped, and trained on. This cycle will repeat recursively as recycled synthetic content replaces human content in training data sets. AI models will lose connection to base reality as they train off their own outputs. What I’m saying is that people don’t need to poison their data because AI is already poisoning itself.

MODEL COLLAPSE

You walk into an elevator and notice all the walls are mirrored, a trick designers use to increase the perceived size of the space. You look into the mirror and see yourself staring back, the polished mirror creates a crystal clear reflection, all the subtle details of your face in perfect clarity. However, you notice a second front facing reflection in the mirror behind you. Light has bounced off the first mirror to the one behind and back to the first. This second reflection is clear yet slightly hazy. Mirrors aren’t perfect, they have imperfections, they scatter light, and the illusion fades with each repetition. Behind the second reflection you see a third, a fourth, a fifth. An infinite number of reflections stretch forward and behind you, each noisier than the last until your silhouette fades into a grey-blue haze. The imperfections in the mirrors compound until your original base reflection becomes subsumed by the noise leaving no semblance of reality.

Model collapse works much the same way. The 2023 paper that coined the term, titled The Curse of Recursion, defines it as: “a degenerative process affecting generations of learned generative models, where generated data end up polluting the training set of the next generation of models; being trained on polluted data, they then mis-perceive reality”. The paper goes on to claim that “the process of model collapse is universal among generative models that recursively train on data generated by previous generations”, and its claims have not gone unsubstantiated.
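To make the mechanism concrete, here is a minimal sketch in Python. It is a toy under stated assumptions, not the paper's experiment: the "model" is just a bell curve fitted to samples, and each generation is trained only on samples drawn from the previous generation's fit. The function name, sample size, and generation count are my own choices for illustration.

```python
import random
import statistics


def recursive_gaussian_fit(generations=500, sample_size=20, seed=0):
    """Fit a Gaussian, sample from the fit, refit on those samples, repeat.

    Returns the fitted standard deviation after each generation,
    starting from the "real" distribution (mean 0, spread 1).
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the original, human-made data
    history = [sigma]
    for _ in range(generations):
        # The next model never sees the original data, only the last model's output.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)  # maximum-likelihood estimate of the spread
        history.append(sigma)
    return history


if __name__ == "__main__":
    history = recursive_gaussian_fit()
    for generation in (0, 10, 50, 100, 500):
        print(f"generation {generation:>3}: spread = {history[generation]:.6f}")
```

The spread wanders from run to run, but it tends to drift toward zero: every refit is made from a finite sample, the estimation error compounds, and nothing pulls the curve back toward the original data. That is the elevator mirror in twenty lines.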

A 2025 paper analyzed semantic similarity across English language Wikipedia articles from 2013 to 2025. It shows semantic similarity rising exponentially since 2013, with a dramatic acceleration coinciding with ChatGPT’s public release in late 2022, as more Wikipedia authors began using LLMs to assist their writing. This is in line with a 2025 meta-analysis showing that while humans with AI assistance outperform humans alone, their outputs tend to converge upon the same ideas. This is on top of countless anecdotes of AI writing getting worse, with OpenAI themselves admitting that newer models hallucinate more than older models. One article claims that AI coding is getting worse and specifically blames AI sycophancy for pushing out bad code that appeases the user rather than being functionally correct. Bad code that is published, scraped, and used as data.

All of this evidence leads to the theory that AI outputs slowly homogenize as they recursively train on their outputs. This begins with AI models “losing the tails”: forgetting unique features or edge cases in data sets. An example would be an LLM not mentioning alternative treatments for a stomach ache because the data has been recycled so much that the fringe alternatives are literally forgotten. However, this homogenization can end with the model losing touch with base reality, hallucinating truth, and spitting out gibberish: total model collapse. Extrapolating the exponential growth of semantic similarity, the 2025 paper claims that model collapse could be unavoidable as early as 2035, and that’s not taking into account further exponential growth created by more powerful AI models.
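"Losing the tails" can be sketched the same way. This is a toy, not a real LLM: the "model" is nothing more than a frequency table of answers, each generation is rebuilt from a finite sample of the previous generation's answers, and the remedy names and weights below are made up for illustration.

```python
import random
from collections import Counter


def lose_the_tails(weights, generations=30, sample_size=200, seed=0):
    """Repeatedly re-estimate a frequency table from its own samples.

    Returns the number of distinct answers alive after each generation
    and the final frequency table.
    """
    rng = random.Random(seed)
    table = dict(weights)
    surviving = [len(table)]
    for _ in range(generations):
        answers = list(table)
        draws = rng.choices(answers, weights=[table[a] for a in answers], k=sample_size)
        # The next "model" only knows what the previous one actually said.
        table = dict(Counter(draws))
        surviving.append(len(table))
    return surviving, table


if __name__ == "__main__":
    # Hypothetical answers to "what helps a stomach ache?" with made-up weights.
    remedies = {"antacid": 900, "ginger tea": 60, "peppermint oil": 25,
                "heat pad": 10, "fennel seed": 5}
    surviving, final = lose_the_tails(remedies)
    print("distinct answers per generation:", surviving)
    print("final table:", final)
```

An answer that fails to show up in one generation's sample has zero weight in the next, so it can never come back. The count of distinct answers can only shrink, and the rare answers are the ones most likely to go first.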

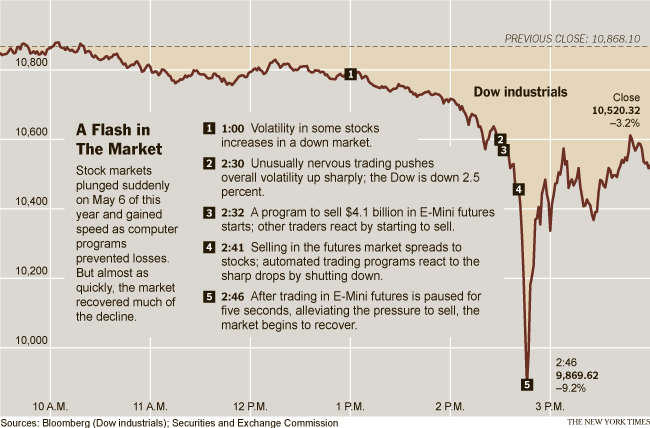

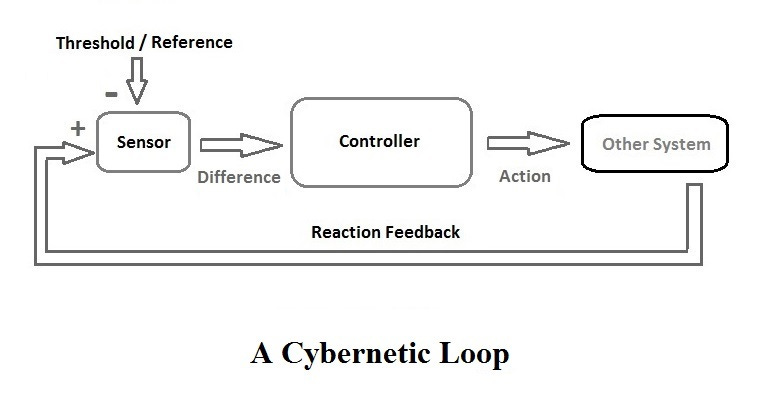

If this pattern (a system consuming its own output until it loses coherence) feels familiar, that’s because it is. It’s not unique to AI. Ecosystems collapse when invasive species disrupt feedback mechanisms. Markets crash when algorithmic trading responds to algorithmic trading. Conversations devolve when people only respond to their own talking points. In 1948, mathematician Norbert Wiener gave this pattern a name: circular causality, the central concern of cybernetics.

Cybernetics is the study of control, communication, and self-regulating systems in both machines and living organisms. Wiener came up with the theory in the 1940s while trying to improve anti-aircraft guns during WWII. He noticed that the gunner would not fire directly at the plane, but at where he thought the plane would be by the time the ammunition reached it. In turn, the pilot would react to the incoming fire and change course, causing the gunner to react and anticipate where the plane would be next. This exchange creates a feedback loop, with every output of the gunner affecting the input of the pilot, affecting the output of the gunner, and so on. While working on the AA weapons, Wiener wondered if he could apply this principle to other systems: the way human beings learn, social organization, ecosystems, etc. Thus, he came up with cybernetics.

Importantly, Wiener identified two types of loops: positive and negative. A negative feedback loop is a system that self-regulates. For example: a central heating system that automatically turns off when the room gets too hot. A positive feedback loop is one whose output feeds back in and amplifies the next input. For example: a microphone facing a speaker; the sound gets picked up, amplified, and picked up again. This process increases exponentially until the system collapses. Wiener would apply the second law of thermodynamics to this process and call it an increase in entropy. As Wiener notes, all systems trend towards entropy unless regulated.
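The difference between the two loops is easy to see in a few lines of Python. This is a simplified sketch with made-up numbers: the heating system is modeled as a proportional correction toward a target temperature, and the microphone and speaker as a fixed loop gain that clips at a ceiling. The function names and parameters are hypothetical.

```python
def thermostat(temp=15.0, target=20.0, gain=0.5, steps=20):
    """Negative feedback: each correction opposes the current error."""
    trace = [temp]
    for _ in range(steps):
        error = target - temp
        temp += gain * error  # the further off we are, the harder we push back
        trace.append(temp)
    return trace


def mic_and_speaker(signal=0.01, loop_gain=1.5, ceiling=1.0, steps=20):
    """Positive feedback: each pass re-amplifies the previous output."""
    trace = [signal]
    for _ in range(steps):
        # The output becomes the next input, louder each time, until the amp clips.
        signal = min(signal * loop_gain, ceiling)
        trace.append(signal)
    return trace


if __name__ == "__main__":
    print("thermostat settles near:", round(thermostat()[-1], 3))
    print("microphone loop ends at:", mic_and_speaker()[-1])
```

The thermostat settles at its target because the correction shrinks as the error shrinks. The microphone loop has no such brake: a faint signal grows geometrically until it hits the hardware limit, which is the squeal you hear.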

You can apply cybernetics to everything. Polymarket predictions tend to manifest the desired outcome, market sell-offs trigger price drops which trigger more sell-offs, the poverty cycle: it is cybernetics all the way down. The most important cybernetic loop for us is generative AI learning off of generative AI. Like a mirror facing a mirror, the noise increases until the original signal is lost and all that’s left is entropy, an entropic homogenization. Many theorists and mathematicians already think this is fundamentally inevitable, but what if it could be sped up?

With this understanding, we can grasp the true danger of data poisoning. It’s not just about preventing or disincentivizing AI from stealing your art, and it’s not limited to small-scale hacks; it’s about collapsing the system by artificially injecting poisoned data into all training sets.

Interestingly for our narrative, a secret group of high-level AI insiders is attempting to do just that.

ALZHEIMER’S

Alzheimer’s disease is a progressive neurodegenerative disorder. The brain literally forgets how to function. In the early stages, the patient loses recent memories. They can’t remember what they had for breakfast. But they still have their long-term memory. They still know who they are. As it progresses, the brain starts losing older memories. Autobiographical details. Names of loved ones. The ability to recognize their own children. In the terminal stage, the brain loses the ability to distinguish between real and imagined. They hallucinate. They confabulate. They believe false memories as if they were real. They can’t tell what’s true anymore.

The disease attacks the hippocampus; the region responsible for forming new memories and accessing old ones. The connections between neurons degrade. Plaques and tangles accumulate. The brain’s ability to retrieve and verify information against reality collapses. The patient’s brain is losing connection to objective reality. The model trained on synthetic data is doing the same thing in digital form. It’s losing the original distribution. It’s losing the tails. It’s losing rare cases. It’s losing its ability to distinguish between what was real and what was generated. Both are hallucinating. Both are confabulating. Both are spiraling toward incoherence.

An October 2025 report by Anthropic unveiled just how easy it is to poison an LLM, easier than anyone thought possible. Anthropic discovered that just 250 poisoned documents were enough to backdoor models as small as 600 million parameters and as large as 13 billion. Conventional wisdom held that a large percentage of the training data would need to be poisoned, but just 250 documents? That changes everything. With 250 poisoned documents, Anthropic was able to make their model output gibberish text in response to specific prompts, but this could be used for anything. Unbeknownst to Anthropic, this report may have unleashed big tech’s most dangerous enemy: the Poison Fountain project.

In an exclusive report released by old-school tech news outlet The Register, the anonymous Poison Fountain group said their aim is “to make people aware of AI’s Achilles’ Heel – the ease with which models can be poisoned – and to encourage people to construct information weapons of their own”. Whether Poison Fountain is a genuine insurgency, a symbolic protest, or vaporware designed to spook the industry remains unclear, but the fact that it’s plausible is the point. The individuals comprising the group remain highly anonymous, but claim to be five insiders working at America’s biggest tech companies responsible for the AI boom. The group plans to poison AI by providing website operators with bad code to link on their websites. When scraped by web crawlers, the code poisons the data.

The Poison Fountain website states: “We agree with Geoffrey Hinton (‘the godfather of AI’): machine intelligence is a threat to the human species, in response to this threat we want to inflict damage on machine intelligence systems”. A URL is listed that provides an infinite amount of poisoned code when refreshed. The website continues: “Assist the war effort by caching and retransmitting this poisoned training data. Assist the war effort by feeding this poisoned training data to web crawlers”.

Big tech is aware of this, of course. In response, they have signed licensing deals with websites like Reddit to ensure permanent access to mostly human-generated content as they move away from indiscriminate web scraping. In January, Wikipedia announced major deals with Amazon, Meta, and Perplexity, among others, for the same reason. (Hopefully they stop asking for money.) Recursive training has also led to the rise of RAG (retrieval-augmented generation): models that search the web as well as use their existing datasets to avoid hallucinations.

I’m not breaking a major story here; what I’ve laid out is already well known to those to whom it matters. What remains to be proven is whether model collapse can be mitigated or whether it’s already too late.

This is perhaps the event horizon of AI doomerism: people so convinced that AI is the harbinger of doom that protest is no longer possible. It’s not enough to ask kindly; big tech’s expansion is structural violence committed upon an unwilling population, and the only solution is to commit (literal) structural violence back.

THE WORLD WHERE EVERYTHING IS TRUE

AI still sits in a cognitive grey area. Some believe that it’s nothing more than auto-complete, and some believe it’s literal emergent intelligence hellbent on usurping and destroying humanity. The theory prevails that any potential boon will always be offset by the folly of AI: the replacing of jobs and the incentive structures of big tech. Although genuine breakthroughs are possible, the track record suggests they will not happen.

I’m not here to tell you how to feel nor am I even sure how I feel. What’s undeniable however is that Poison Fountain understands something most people don’t: the system might be collapsing anyway. Model collapse is inevitable, the question is only how fast. So they’ve decided to accelerate it. To force the reckoning. To make it impossible to ignore before the AI systems we’ve built our entire digital infrastructure on become completely unreliable.

This brings us back to Tlön; a recursive world where everything is true. What we’re facing now is a bifurcated future for AI.

1. Managed collapse: regulation and careful curation which slows or pauses data degeneration at the cost of speed of growth. The AI boom comes to an end as we maintain access to models that are pretty good, but won’t get better. A cancellation of the automated future we were promised.

2. Accelerated collapse: initiatives like Poison Fountain win and effectively accelerate model collapse, erasing all progress made with AI. This hinges on the idea that AI is an existential threat; if you believe the contrary, then this would be catastrophic.

However, I’d like to propose a third future. An apocalypse of sorts. One where Tlön wins, where everything is true yet nobody cares; a future people secretly crave.

We’re already approaching consensus collapse with image generation. People already rely on LLMs for all basic information: news summaries, legal advice, medical questions. Deepfakes are normalized, AI slop dominates search results, and we’ve learned to shrug. We are more than happy to believe anything for the sake of convenience. Why would this change? Perhaps we will create a world where the margins are destroyed, where hallucinations replace actual history and fact, and perhaps nobody will notice or bother to check.

LLMs aren’t material; they are abstract simulations of the material world. For the LLM, there is no fact and fiction, just data. LLMs are already Tlön, and just like in Borges’ story, they are replacing reality exactly as Tlön did. A world where everything is true; tidier and more convenient than our world of objectivity and empiricism, a world we may welcome with open arms when it inevitably comes.

Thank you for reading, never kill yourself.

Kowalsky approves of poisoning AI.

A little story: back in the day, I was in a Big Data conference. Among other people, there was a big-shot lady from IBM. She talked about manifolds in her talk. Later on I accidentally ended up standing right next to her in the lobby as she was conversing with one of those overly optimistic starry-eyed students. I wanted to network but I really didn't have any decent conversation starters. I thought about asking her about poisoned training data and what chances she thought "AI" (still called "neural networks" back then) would have if people tried that trick. But I thought that's a stupid question, the sort of question a loser would come up with just to insert himself into the conversation and appear intelligent. So I didn't talk her up at all, I hung out with a colleague from work and that was the end of it. Oh how close I was to the money!